A fundamental component of motion modeling with deep learning is the pose parameterization. A suitable parameterization is one that holistically encodes the rotational and positional components. The dual quaternion formulation proposed in this work encodes both components, yielding a rich representation that implicitly preserves the nuances and subtle variations in the motion of different characters.

Abstract

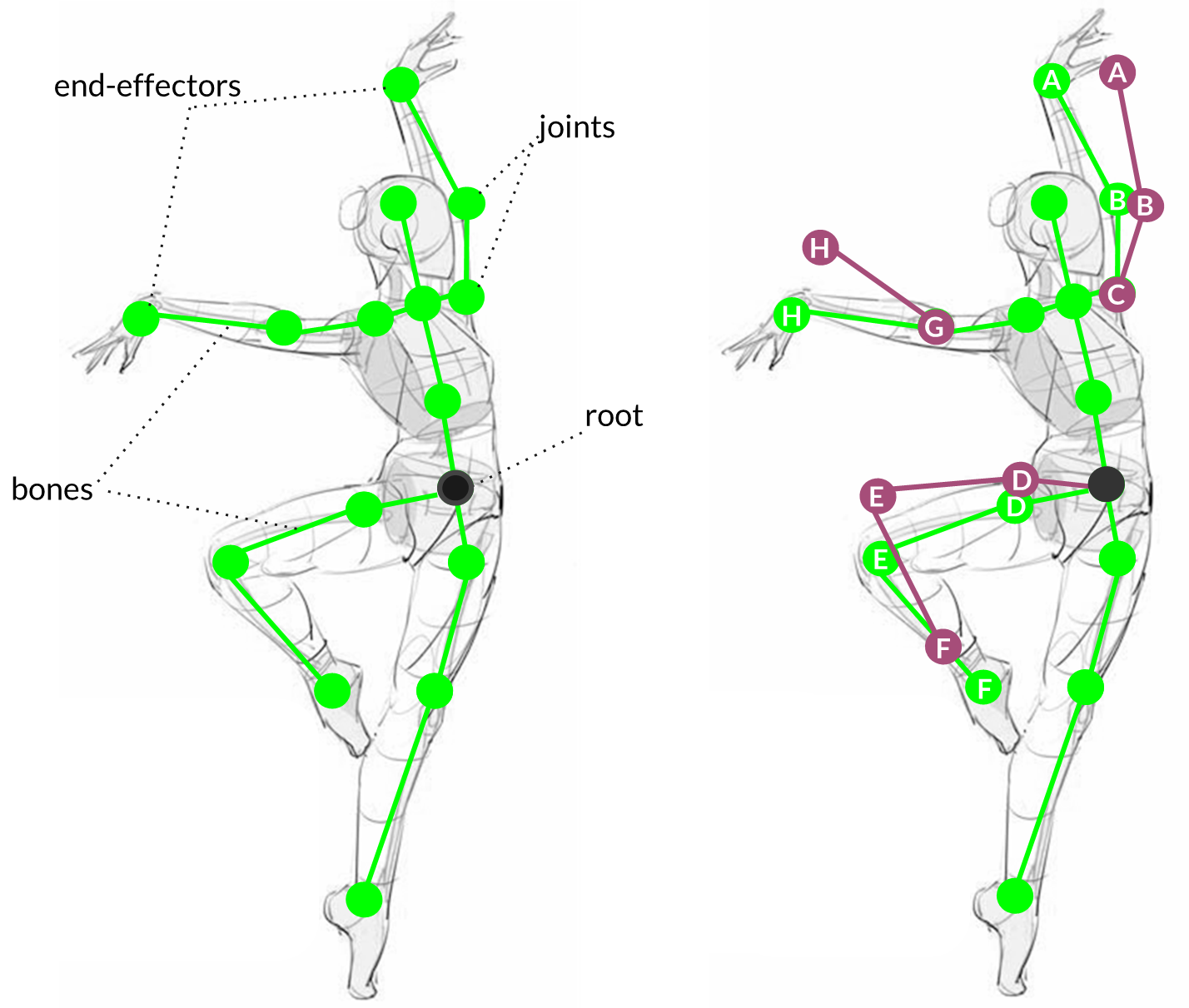

Data-driven skeletal animation relies on the existence of a suitable learning scheme, which can capture the rich context of motion. However, commonly used motion representations often fail to accurately encode the full articulation of motion, or present artifacts. In this work, we address the fundamental problem of finding a robust pose representation for motion, suitable for deep skeletal animation, one that can better constrain poses and faithfully capture nuances correlated with skeletal characteristics. Our representation is based on dual quaternions, mathematical abstractions with well-defined operations, which simultaneously encode rotational and positional information, enabling a rich encoding centered around the root. We demonstrate that our representation overcomes common motion artifacts, and assess its performance compared to other popular representations. We conduct an ablation study to evaluate the impact of various losses that can be incorporated during learning. Leveraging the fact that our representation implicitly encodes skeletal motion attributes, we train a network on a dataset comprising skeletons with different proportions, without the need to retarget them first to a universal skeleton, a step that causes subtle motion elements to be missed. Qualitative results demonstrate the usefulness of the parameterization in skeleton-specific synthesis.

Method

A dual quaternion \( \mathbf{\overline{q}} \) can be represented as an ensemble of two ordinary quaternions \( \mathbf{q}_r \) and \( \mathbf{q}_d \), in the form \( \mathbf{q}_r + \mathbf{q}_d\varepsilon \), where \( \varepsilon \) is the dual unit, satisfying the relation \( \varepsilon^2 = 0 \). The first quaternion, \( \mathbf{q}_r \), describes the rotation. The second quaternion, \( \mathbf{q}_d \), encodes translational information. We establish the following notation:

| Symbol | Meaning |
|---|---|
| \( \mathbf{q} \) | quaternion |
| \( \mathbf{\overline{q}} \) | dual quaternion |
| \( \mathbf{\hat q} \) | unit quaternion |
| \( \mathbf{\overline{\hat q}} \) | unit dual quaternion |
| \( \mathbf{q^*} \) | quaternion conjugate |
| \( \mathbf{\overline{q}^*} \) | dual quaternion conjugate |
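As a minimal sketch of this notation (not the implementation used in this work), the Python snippet below builds a unit dual quaternion from a rotation quaternion and a translation, multiplies two dual quaternions using \( \varepsilon^2 = 0 \), and recovers the translation. The `(w, x, y, z)` component order, the convention \( \mathbf{q}_d = \tfrac{1}{2}\,\mathbf{t}\,\mathbf{q}_r \), and all function names are assumptions made for illustration.

```python
import math

def qmul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def qconj(q):
    """Quaternion conjugate q*."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def dq_from_rt(q_r, t):
    """Build (q_r, q_d) with q_d = 0.5 * (0, t) * q_r (assumed convention)."""
    q_d = tuple(0.5 * c for c in qmul((0.0, *t), q_r))
    return q_r, q_d

def dq_mul(a, b):
    """(a_r + a_d e)(b_r + b_d e) = a_r b_r + (a_r b_d + a_d b_r) e, since e^2 = 0."""
    a_r, a_d = a
    b_r, b_d = b
    real = qmul(a_r, b_r)
    dual = tuple(p + q for p, q in zip(qmul(a_r, b_d), qmul(a_d, b_r)))
    return real, dual

def dq_translation(dq):
    """Recover the translation as the vector part of 2 * q_d * q_r^*."""
    q_r, q_d = dq
    _, x, y, z = qmul(q_d, qconj(q_r))
    return (2.0 * x, 2.0 * y, 2.0 * z)

# Usage: a parent rotated 90 degrees about z and shifted along x,
# composed with a child that is only shifted along x.
s = math.sqrt(0.5)
parent = dq_from_rt((s, 0.0, 0.0, s), (1.0, 0.0, 0.0))
child = dq_from_rt((1.0, 0.0, 0.0, 0.0), (1.0, 0.0, 0.0))
combined = dq_mul(parent, child)
print(dq_translation(combined))
```

In this sketch the rotational part of the product is simply the product of the two rotation quaternions, while the dual part accumulates the translation rotated into the parent frame.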

Dual quaternions allow for convenient mappings to and from other representations that are currently used in the literature (quaternions, rotation matrices, ortho6D [Zhou et al., 2018]), allowing for effortless integration into current architectures. They also come with well-defined mathematical operations, such as addition and multiplication.
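To illustrate such mappings, here is a small sketch that converts the rotational quaternion \( \mathbf{q}_r \) of a unit dual quaternion to a rotation matrix and to the ortho6D representation, and back. It assumes a `(w, x, y, z)` component order and takes ortho6D to be the first two columns of the rotation matrix, with Gram-Schmidt orthogonalization for the inverse mapping; the function names are hypothetical.

```python
import math

def quat_to_matrix(q):
    """Rotation matrix (list of rows) from a unit quaternion (w, x, y, z)."""
    w, x, y, z = q
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]

def matrix_to_ortho6d(R):
    """Keep the first two columns of R, stacked into a 6-vector."""
    return [R[0][0], R[1][0], R[2][0], R[0][1], R[1][1], R[2][1]]

def _normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]

def ortho6d_to_matrix(d6):
    """Rebuild a rotation matrix from 6 numbers via Gram-Schmidt."""
    a1, a2 = d6[:3], d6[3:]
    b1 = _normalize(a1)
    dot = sum(p * q for p, q in zip(b1, a2))
    b2 = _normalize([q - dot * p for p, q in zip(b1, a2)])
    # The third column is the cross product b1 x b2.
    b3 = [
        b1[1] * b2[2] - b1[2] * b2[1],
        b1[2] * b2[0] - b1[0] * b2[2],
        b1[0] * b2[1] - b1[1] * b2[0],
    ]
    # b1, b2, b3 are the columns of the matrix.
    return [[b1[i], b2[i], b3[i]] for i in range(3)]

# Usage: a 90 degree rotation about the z axis.
s = math.sqrt(0.5)
R = quat_to_matrix((s, 0.0, 0.0, s))
d6 = matrix_to_ortho6d(R)
R_back = ortho6d_to_matrix(d6)
```

Because the inverse mapping re-orthogonalizes its input, it also returns a valid rotation matrix when the six numbers come from a network output that is only approximately orthonormal.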

Due to the mathematical properties of dual quaternions we can extract the rotational and positional components. We adopt a current coordinate system, so that the extracted positions correspond to the joint positions with respect to the root joint. We model the root displacement as a separate component. We can express the current transformation of the root joint using local homogeneous coordinates of the form: \[ M_{curr,root} = \begin{bmatrix} r_{11} & r_{12} & r_{13} & 0 \\ r_{21} & r_{22} & r_{23} & 0 \\ r_{31} & r_{32} & r_{33} & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix} \]

Then, following the tree hierarchy and using the local homogeneous coordinates of each joint \( j \), \[ M_{loc,j} = \begin{bmatrix} r_{11} & r_{12} & r_{13} & \text{offset}_x \\ r_{21} & r_{22} & r_{23} & \text{offset}_y \\ r_{31} & r_{32} & r_{33} & \text{offset}_z \\ 0 & 0 & 0 & 1 \end{bmatrix}, \] where the \( \text{offset} \) is taken from the hierarchy of the bvh file, we can compute the current homogeneous representation for each joint using \[ M_{curr,j} = M_{curr,(j-1)} \times M_{loc,j}, \] where \( (j-1) \) denotes the parent of joint \( j \). Obtaining the local rotations w.r.t. each joint's parent in the tree hierarchy, as well as the offsets, is straightforward when animation files are used. Conversely, we can recover the local transformation of joint \( j \) using the inverse procedure: \[ M_{loc,j} = M^{-1}_{curr,(j-1)} M_{curr,j}. \] With our formulation we take the hierarchical rig into consideration and overcome problems such as error accumulation (see Figure below).
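The two recursions above can be sketched in a few lines of plain Python. This is an illustrative sketch under stated assumptions rather than the code released with this work: the skeleton is described by a hypothetical `parents` list in which every parent index precedes its children (with `-1` for the root), matrices are nested lists, and the helper names are made up for the example.

```python
def matmul4(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def local_matrix(R, offset):
    """Assemble M_loc from a 3x3 rotation and a joint offset."""
    rows = [list(R[i]) + [offset[i]] for i in range(3)]
    return rows + [[0.0, 0.0, 0.0, 1.0]]

def rigid_inverse(M):
    """Inverse of a rigid transform: rotation R^T, translation -R^T t."""
    Rt = [[M[c][r] for c in range(3)] for r in range(3)]
    t = [M[r][3] for r in range(3)]
    ti = [-sum(Rt[r][k] * t[k] for k in range(3)) for r in range(3)]
    return [Rt[r] + [ti[r]] for r in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def forward_kinematics(parents, local_mats):
    """M_curr,j = M_curr,parent(j) * M_loc,j, accumulated down the tree."""
    curr = []
    for j, p in enumerate(parents):
        curr.append(local_mats[j] if p < 0 else matmul4(curr[p], local_mats[j]))
    return curr

def recover_local(parents, curr_mats):
    """M_loc,j = inverse(M_curr,parent(j)) * M_curr,j."""
    return [curr_mats[j] if p < 0
            else matmul4(rigid_inverse(curr_mats[p]), curr_mats[j])
            for j, p in enumerate(parents)]

# Usage: a three-joint chain; the root has no offset, as in M_curr,root.
Rz90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
parents = [-1, 0, 1]
local_mats = [
    local_matrix(Rz90, (0.0, 0.0, 0.0)),
    local_matrix(I3, (1.0, 0.0, 0.0)),
    local_matrix(Rz90, (1.0, 0.0, 0.0)),
]
curr_mats = forward_kinematics(parents, local_mats)
# The last column of each current matrix is the joint position
# relative to the root.
positions = [[M[r][3] for r in range(3)] for M in curr_mats]
```

The last column of each current matrix gives the joint position with respect to the root, which is the positional information that the dual part of the corresponding dual quaternion encodes.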

SCA 2022 Video

Overview Video & Results

Citation

@article {Andreou:2022,
  journal = {Computer Graphics Forum},
  title = {Pose Representations for Deep Skeletal Animation},
  author = {Andreou, Nefeli and Aristidou, Andreas and Chrysanthou, Yiorgos},
  year = {2022},
  publisher = {The Eurographics Association and John Wiley \& Sons Ltd.},
  ISSN = {1467-8659},
  DOI = {10.1111/cgf.14632}
}